So far we have considered methods for estimating the value functions for a policy given an infinite supply of episodes generated using that policy. Suppose now that all we have are episodes generated from a different policy. That is, suppose we wish to estimate $V^\pi$ or $Q^\pi$, but all we have are episodes following $\pi'$, where $\pi' \neq \pi$. Can we learn the value function for a policy given only experience "off" the policy?

Happily, in many cases we can. Of course, in order to use episodes from $\pi'$ to estimate values for $\pi$, we require that every action taken under $\pi$ is also taken, at least occasionally, under $\pi'$. That is, we require that $\pi(s,a) > 0$ implies $\pi'(s,a) > 0$. In the episodes generated using $\pi'$, consider the $i$th first visit to state $s$ and the complete sequence of states and actions following that visit. Let $p_i(s)$ and $p'_i(s)$ denote the probabilities of that complete sequence happening given policies $\pi$ and $\pi'$ and starting from $s$. Let $R_i(s)$ denote the corresponding observed return from state $s$. To average these to obtain an unbiased estimate of $V^\pi(s)$, we need only weight each return by its relative probability of occurring under $\pi$ and $\pi'$, that is, by $p_i(s)/p'_i(s)$. The desired Monte Carlo estimate after observing $n_s$ returns from state $s$ is then

$$
V(s) = \frac{\sum_{i=1}^{n_s} \frac{p_i(s)}{p'_i(s)} R_i(s)}{\sum_{i=1}^{n_s} \frac{p_i(s)}{p'_i(s)}} \tag{5.3}
$$
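The weighted average described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `weighted_mc_estimate` is hypothetical, and the weights (one relative probability per observed return) are assumed to have been computed already.

```python
from typing import Sequence


def weighted_mc_estimate(weights: Sequence[float], returns: Sequence[float]) -> float:
    """Weighted average of observed returns, normalized by the sum of the weights.

    weights[i] is the relative probability of the i-th observed sequence
    under the two policies; returns[i] is the return observed after it.
    """
    if len(weights) != len(returns):
        raise ValueError("need exactly one weight per return")
    total_weight = sum(weights)
    if total_weight == 0:
        # Every observed sequence has probability zero under the policy
        # being evaluated, so the data say nothing about its value.
        raise ValueError("all weights are zero; no estimate is possible")
    weighted_sum = sum(w * r for w, r in zip(weights, returns))
    return weighted_sum / total_weight


# Three returns observed from the same state, with made-up weights.
estimate = weighted_mc_estimate([0.5, 2.0, 1.5], [1.0, 0.0, 2.0])
print(estimate)  # (0.5*1.0 + 2.0*0.0 + 1.5*2.0) / 4.0 = 0.875
```

Returns whose sequences are more likely under the policy being evaluated than under the policy that generated them receive weights above 1 and so count for more in the average.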

This equation involves the probabilities $p_i(s)$ and $p'_i(s)$, which are normally considered unknown in applications of Monte Carlo methods. Fortunately, here we need only their ratio, $p_i(s)/p'_i(s)$, which can be determined with no knowledge of the environment's dynamics. Let $T_i(s)$ be the time of termination of the $i$th episode involving state $s$. Then

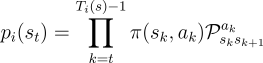
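As a sketch of why the environment's dynamics drop out of the ratio, assume that the probability of a complete sequence factors into the policy's action probabilities and the environment's one-step transition probabilities. The transition probabilities are written here as $\mathcal{P}^{a_k}_{s_k s_{k+1}}$, a notation assumed for illustration. Let $\pi$ be the policy being evaluated, $\pi'$ the policy that generated the episodes, and $t$ the time of the first visit to $s$ in the episode, so that $s_t = s$. The transition terms are identical under both policies and cancel:

$$
\frac{p_i(s_t)}{p'_i(s_t)}
= \frac{\prod_{k=t}^{T_i(s)-1} \pi(s_k,a_k)\,\mathcal{P}^{a_k}_{s_k s_{k+1}}}
       {\prod_{k=t}^{T_i(s)-1} \pi'(s_k,a_k)\,\mathcal{P}^{a_k}_{s_k s_{k+1}}}
= \prod_{k=t}^{T_i(s)-1} \frac{\pi(s_k,a_k)}{\pi'(s_k,a_k)}
$$

Under that assumption, the weight applied to each return depends only on the two policies and not on the environment's dynamics.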

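Because only the two policies enter the ratio, it can be computed directly from the state-action pairs observed from the first visit onward. The following is a minimal sketch, not a definitive implementation: the names `likelihood_ratio`, `target_policy`, and `behavior_policy` are hypothetical, and each policy is assumed to be available as a function returning the probability of taking an action in a state.

```python
from typing import Callable, Hashable, Sequence, Tuple

State = Hashable
Action = Hashable
# A policy maps (state, action) to the probability of taking that action.
Policy = Callable[[State, Action], float]


def likelihood_ratio(
    steps: Sequence[Tuple[State, Action]],
    pi: Policy,
    pi_prime: Policy,
) -> float:
    """Product over the observed steps of pi(s, a) / pi_prime(s, a).

    `steps` holds the (state, action) pairs from the first visit to the
    state of interest up to, but not including, the terminal state.
    """
    ratio = 1.0
    for state, action in steps:
        generating_prob = pi_prime(state, action)
        if generating_prob <= 0.0:
            # The episode was generated by pi_prime, so every action in it
            # must have had positive probability under pi_prime.
            raise ValueError("observed action has zero probability under pi_prime")
        ratio *= pi(state, action) / generating_prob
    return ratio


def target_policy(state: State, action: Action) -> float:
    # Illustrative policy to evaluate: always chooses "right".
    return 1.0 if action == "right" else 0.0


def behavior_policy(state: State, action: Action) -> float:
    # Illustrative generating policy: "left" and "right" equally likely.
    return 0.5


print(likelihood_ratio([("A", "right"), ("B", "right")], target_policy, behavior_policy))  # 4.0
print(likelihood_ratio([("A", "left"), ("B", "right")], target_policy, behavior_policy))   # 0.0
```

A ratio of zero means the observed sequence could not have occurred under the policy being evaluated, so the corresponding return contributes nothing to the estimate.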

Exercise 5.3 What is the Monte Carlo estimate analogous to (5.3) for action values, given returns generated using