We now develop in detail one class of learning methods for function approximation in value prediction, those based on gradient descent. Gradient-descent methods are among the most widely used of all function approximation methods and are particularly well suited to reinforcement learning.

In gradient-descent methods, the parameter vector is a column vector with

a fixed number of real valued components,

![]() (the

(the ![]() here denotes

transpose), and

here denotes

transpose), and ![]() is a smooth differentiable function of

is a smooth differentiable function of

![]() for all

for all ![]() . For now, let us assume that on each step

. For now, let us assume that on each step ![]() , we

observe a new example

, we

observe a new example ![]() . These states might be

successive states from an interaction with the environment, but for now we do

not assume so. Even though we are given the exact,

correct values,

. These states might be

successive states from an interaction with the environment, but for now we do

not assume so. Even though we are given the exact,

correct values, ![]() for each

for each ![]() , there is still a difficult problem

because our function approximator has limited resources and thus limited

resolution. In particular, there is generally no

, there is still a difficult problem

because our function approximator has limited resources and thus limited

resolution. In particular, there is generally no ![]() that gets all the states,

or even all the examples, exactly correct. In addition, we must generalize to

all the other states that have not appeared in examples.

that gets all the states,

or even all the examples, exactly correct. In addition, we must generalize to

all the other states that have not appeared in examples.

We assume that states appear in examples with the same distribution, ![]() , over

which we are trying to minimize the MSE as given by (8.1). A good

strategy in this case is to try to minimize error on the observed examples.

Gradient-descent methods do this by adjusting the parameter vector after

each example by a small amount in the direction that would most reduce the

error on that example:

, over

which we are trying to minimize the MSE as given by (8.1). A good

strategy in this case is to try to minimize error on the observed examples.

Gradient-descent methods do this by adjusting the parameter vector after

each example by a small amount in the direction that would most reduce the

error on that example:

It may not be immediately apparent why only a small step is taken in the direction of the gradient. Could we not move all the way in this direction and completely eliminate the error on the example? In many cases this could be done, but usually it is not desirable. Remember that we do not seek or expect to find a value function that has zero error on all states, but only an approximation that balances the errors in different states. If we completely corrected each example in one step, then we would not find such a balance. In fact, the convergence results for gradient methods assume that the step-size parameter decreases over time. If it decreases in such a way as to satisfy the standard stochastic approximation conditions (2.7), then the gradient-descent method (8.2) is guaranteed to converge to a local optimum.

We turn now to the case in which the target output, ![]() , of the

, of the ![]() th

training example,

th

training example, ![]() , is not the true value,

, is not the true value,

![]() , but some approximation of it. For example,

, but some approximation of it. For example, ![]() might be a

noise-corrupted version of

might be a

noise-corrupted version of ![]() , or it might be one of the backed-up

values mentioned in the previous section. In such cases we cannot perform the

exact update (8.2) because

, or it might be one of the backed-up

values mentioned in the previous section. In such cases we cannot perform the

exact update (8.2) because ![]() is unknown, but we can

approximate it by substituting

is unknown, but we can

approximate it by substituting ![]() in place of

in place of ![]() . This yields

the general gradient-descent method for state-value prediction:

. This yields

the general gradient-descent method for state-value prediction:

For example, suppose the states in the examples are the states generated

by interaction (or simulated interaction) with the environment using policy ![]() .

Let

.

Let ![]() denote the return following each state,

denote the return following each state, ![]() . Because the true value

of a state is the expected value of the return following it, the Monte Carlo

target

. Because the true value

of a state is the expected value of the return following it, the Monte Carlo

target

![]() is by definition an unbiased estimate of

is by definition an unbiased estimate of ![]() . With this

choice, the general gradient-descent method (8.3) converges to a

locally optimal approximation to

. With this

choice, the general gradient-descent method (8.3) converges to a

locally optimal approximation to ![]() . Thus, the gradient-descent

version of Monte Carlo state-value prediction is guaranteed to find a

locally optimal solution.

. Thus, the gradient-descent

version of Monte Carlo state-value prediction is guaranteed to find a

locally optimal solution.

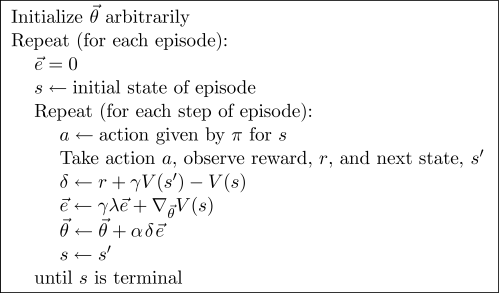

Similarly, we can use ![]() -step TD returns and their averages for

-step TD returns and their averages for ![]() . For

example, the gradient-descent form of TD(

. For

example, the gradient-descent form of TD(![]() ) uses the

) uses the ![]() -return,

-return,

![]() , as its approximation to

, as its approximation to ![]() , yielding the

forward-view update:

, yielding the

forward-view update:

As (8.4) provides the forward view of

gradient-descent TD(![]() ), so the backward view is provided by

), so the backward view is provided by

|

Two methods for gradient-based function approximation have been used widely in reinforcement learning. One is multilayer artificial neural networks using the error backpropagation algorithm. This maps immediately onto the equations and algorithms just given, where the backpropagation process is the way of computing the gradients. The second popular form is the linear form, which we discuss extensively in the next section.

Exercise 8.1 Show that table-lookup TD(

Exercise 8.2 State aggregation is a simple form of generalizing function approximation in which states are grouped together, with one table entry (value estimate) used for each group. Whenever a state in a group is encountered, the group's entry is used to determine the state's value, and when the state is updated, the group's entry is updated. Show that this kind of state aggregation is a special case of a gradient method such as (8.4).

Exercise 8.3 The equations given in this section are for the on-line version of gradient-descent TD(

Exercise 8.4 For off-line updating, show that equations ((8.5)-(8.7)) produce updates identical to (8.4).