Next: 6.6 Actor-Critic Methods

Up: 6. Temporal-Difference Learning

Previous: 6.4 Sarsa: On-Policy TD

Contents

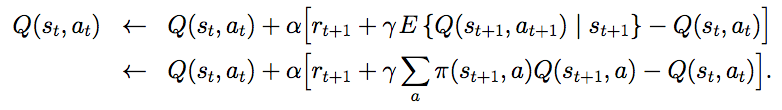

One of the most important breakthroughs in reinforcement learning was the

development of an off-policy TD control algorithm known as Q-learning

(Watkins, 1989). Its simplest form, one-step Q-learning, is defined by

| (6.6) |

In this case, the learned action-value function,  , directly approximates

, directly approximates

, the optimal action-value function, independent of the policy being followed.

This dramatically simplifies the analysis of the algorithm and enabled early

convergence proofs. The policy still has an effect in that it determines which

state-action pairs are visited and updated. However, all that is required for

correct convergence is that all pairs continue to be updated.

As we observed in Chapter 5, this is a minimal requirement in the sense that any

method guaranteed to find optimal behavior in the general case must require it.

Under this assumption and a variant of the usual stochastic approximation

conditions on the sequence of step-size parameters,

, the optimal action-value function, independent of the policy being followed.

This dramatically simplifies the analysis of the algorithm and enabled early

convergence proofs. The policy still has an effect in that it determines which

state-action pairs are visited and updated. However, all that is required for

correct convergence is that all pairs continue to be updated.

As we observed in Chapter 5, this is a minimal requirement in the sense that any

method guaranteed to find optimal behavior in the general case must require it.

Under this assumption and a variant of the usual stochastic approximation

conditions on the sequence of step-size parameters,  has been shown to

converge with probability 1 to

has been shown to

converge with probability 1 to  . The Q-learning algorithm is shown in

procedural form in Figure

6.12.

. The Q-learning algorithm is shown in

procedural form in Figure

6.12.

Figure

6.12:

Q-learning: An off-policy TD control algorithm.

|

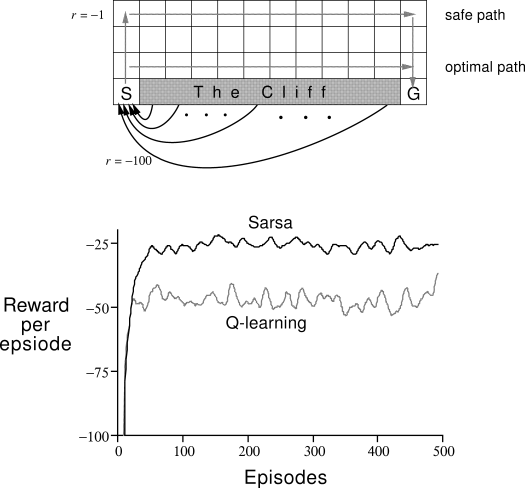

What is the backup diagram for Q-learning? The rule

(6.6) updates a state-action pair, so the top node, the root of

the backup, must be a small, filled action node. The backup is also from action nodes, maximizing over all those actions possible in the next

state. Thus the bottom nodes of the backup diagram should be all these action

nodes. Finally, remember that we indicate taking the maximum of these "next

action" nodes with an arc across them (Figure 3.7). Can you guess now

what the diagram is? If so, please do make

a guess before turning to the answer in

Figure

6.14.

Figure

6.13:

The cliff-walking task. The results are from a single run, but

smoothed.

|

Figure

6.14:

The backup diagram for Q-learning.

|

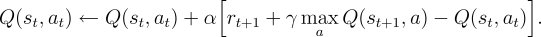

Example 6.6: Cliff Walking

This gridworld example compares Sarsa and Q-learning, highlighting the

difference between on-policy (Sarsa) and off-policy (Q-learning) methods.

Consider the gridworld shown in the upper part of Figure

6.13. This is

a standard undiscounted, episodic task, with start and goal states, and the

usual actions causing movement up, down, right, and left. Reward is  on all

transitions except those into the the region marked "The Cliff." Stepping into

this region incurs a reward of

on all

transitions except those into the the region marked "The Cliff." Stepping into

this region incurs a reward of  and sends the agent instantly back to the

start. The lower part of the figure shows the performance of the Sarsa and

Q-learning methods with

and sends the agent instantly back to the

start. The lower part of the figure shows the performance of the Sarsa and

Q-learning methods with  -greedy action selection,

-greedy action selection,  . After an initial

transient, Q-learning learns values for the optimal policy, that which travels

right along the edge of the cliff. Unfortunately, this results in its

occasionally falling off the cliff because of the

. After an initial

transient, Q-learning learns values for the optimal policy, that which travels

right along the edge of the cliff. Unfortunately, this results in its

occasionally falling off the cliff because of the  -greedy action selection.

Sarsa, on the other hand, takes the action selection into account and learns the

longer but safer path through the upper part of the grid. Although Q-learning

actually learns the values of the optimal policy, its on-line performance is worse

than that of Sarsa, which learns the roundabout policy. Of course, if

-greedy action selection.

Sarsa, on the other hand, takes the action selection into account and learns the

longer but safer path through the upper part of the grid. Although Q-learning

actually learns the values of the optimal policy, its on-line performance is worse

than that of Sarsa, which learns the roundabout policy. Of course, if  were

gradually reduced, then both methods would asymptotically converge to the

optimal policy.

were

gradually reduced, then both methods would asymptotically converge to the

optimal policy.

Exercise 6.9

Why is Q-learning considered an off-policy control method?

Exercise 6.10

Consider the learning algorithm that is just like Q-learning except

that instead of the maximum over next state-action pairs it uses the expected

value, taking into account how likely each action is under the current policy.

That is, consider the algorithm otherwise like Q-learning except with the update

rule

Is this new method an on-policy or off-policy method?

What is the backup diagram for this algorithm? Given the same amount of

experience, would you expect this method to work better or worse than Sarsa?

What other considerations might impact the comparison of this method with Sarsa?

Next: 6.6 Actor-Critic Methods

Up: 6. Temporal-Difference Learning

Previous: 6.4 Sarsa: On-Policy TD

Contents

Mark Lee

2005-01-04